Claude 3 Outperforms GPT-4: A New Champion Emerges

Breaking Down the Upset in AI Chatbot Rankings

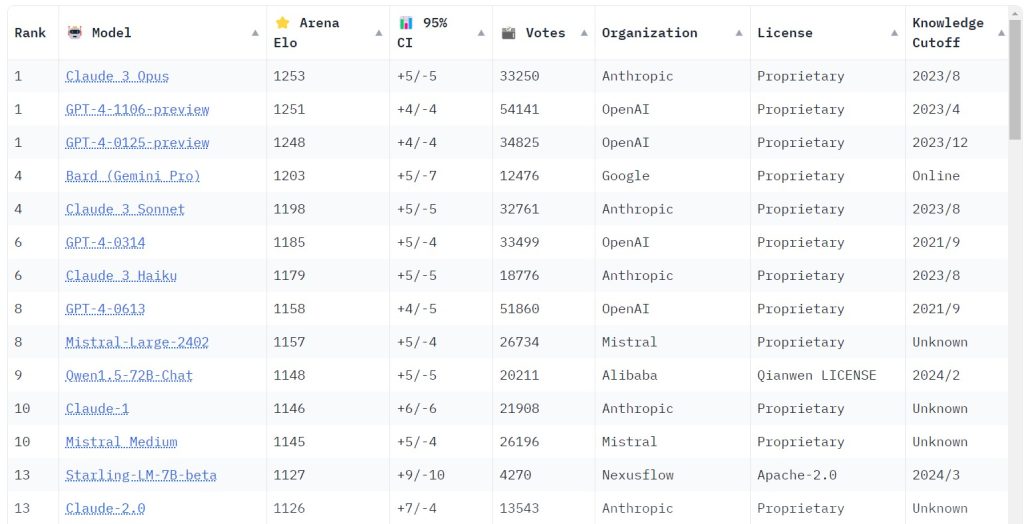

The AI landscape has shifted: Claude 3 Opus has overtaken GPT-4 to take first place on the Chatbot Arena leaderboard. That is no small feat, considering that GPT-4 had reigned at the top of the rankings since its launch. The leaderboard ranks models by human votes: a user submits a prompt, sees responses from two anonymous models side by side, and picks the better one. On those head-to-head votes, Claude 3 Opus narrowly edged out its competition, and the result has caught the attention of the whole field.
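
Chatbot Arena turns those pairwise votes into Elo-style ratings. The sketch below shows the classic online Elo update applied to a handful of made-up votes, to illustrate how "A beat B" judgments become a ranking. The model names, the votes, the K-factor of 32, and the 1000-point starting rating are all illustrative assumptions, and the live leaderboard's actual computation (including its statistical fitting and confidence intervals) differs in detail.

```python
from collections import defaultdict


def expected_score(r_a: float, r_b: float) -> float:
    """Probability that model A beats model B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400.0))


def elo_ratings(votes, k: float = 32.0, initial: float = 1000.0) -> dict:
    """Replay pairwise votes in order and return the final ratings.

    Each vote is (model_a, model_b, score_a), where score_a is 1.0 if A won,
    0.0 if B won, and 0.5 for a tie. K and the starting rating are assumptions.
    """
    ratings = defaultdict(lambda: initial)
    for model_a, model_b, score_a in votes:
        e_a = expected_score(ratings[model_a], ratings[model_b])
        delta = k * (score_a - e_a)
        # Points move from loser to winner, so the total is conserved.
        ratings[model_a] += delta
        ratings[model_b] -= delta
    return dict(ratings)


# Hypothetical toy votes, not real Chatbot Arena data.
votes = [
    ("model-x", "model-y", 1.0),
    ("model-x", "model-z", 0.5),
    ("model-y", "model-z", 0.0),
    ("model-x", "model-y", 1.0),
]
ratings = elo_ratings(votes)
for name, rating in sorted(ratings.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {rating:.1f}")
```

A win against a higher-rated opponent moves more points than a win against a lower-rated one, which is why a narrow lead at the top can flip after a run of close head-to-head votes.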

But what does this mean for the AI community? For starters, it ends OpenAI’s long-standing dominance of the leaderboard and raises the bar that other models are now measured against. The top of the rankings is no longer a one-vendor affair, which underscores a point made by independent researchers such as Simon Willison: “We all benefit from a diversity of top vendors in this space.”

The significance of this upset is twofold. It showcases Anthropic’s skill at fine-tuning language models, and it reflects a change in how AI models are judged, with ‘vibes’, or user preferences, playing an increasingly important role. Standard benchmarks may not do justice to these tools, so subjective user experience is becoming the yardstick for future comparisons.

What Makes Claude 3’s Victory Significant?

Claude 3’s triumph over GPT-4 is not just about topping a leaderboard; it marks a shift in user preference and raises the question of what we actually value in our digital assistants. Users such as software developer Pietro Schirano point out how easy it is to switch between these models, which makes adaptability and flexibility key factors going forward.

Even Claude 3 Haiku, the smallest model in the family, is turning heads, showing that size is not everything when it comes to capability. Without the scale of its larger siblings, Haiku delivers competitive results at lower cost and higher speed.

The Inner Workings of Claude 3 and GPT-4

Technological Innovations Behind Claude 3’s Success

Anthropic has pulled off something special with Claude 3 Opus. The result is not simply a matter of being newer or training on more data; it comes from design choices that resonate with users. Anthropic’s launch of Claude 3 emphasized speed, capability, and context length (a 200,000-token context window), all of which contribute to its success.

Claude’s rise through the ranks reflects a clear grasp of what makes an AI model helpful: understanding complex queries quickly and answering them accurately in context. That shows up in how users rate their interactions with large language models (LLMs), favoring the ones that feel more intuitive.

Comparing the Architectures: Claude 3 vs. GPT-4

The architecture of an LLM plays a vital role in its effectiveness, but neither OpenAI nor Anthropic has published architectural details for these models. Both rest on extensive training data and sophisticated methods, so the differences that users and developers can actually see lie in how the models are packaged and offered.

OpenAI offers GPT-4 as several dated snapshots, so developers can pin a specific version and keep behavior consistent for apps built on its API. Anthropic, in contrast, emphasizes versatility through a family of differently sized models (Haiku, Sonnet, and Opus), covering everything from advanced tasks to cost-sensitive workloads.

Implications of Claude 3’s Triumph for the AI Industry

Shifting Dynamics in AI Research and Development

The ascent of Claude 3 is more than another notch on Anthropic’s belt; it reflects shifting dynamics in AI research and development. Proprietary systems currently dominate the rankings, but open-source efforts are gaining momentum too, and Meta’s upcoming Llama 3 release could shake things up further.

This competition spurs innovation across the board, with companies striving not only for better performance but also for greater accessibility, which is crucial if the goal is to democratize AI technology.

What This Means for Users and Developers

For users, Claude 3 outperforming GPT-4 opens up new possibilities for digital assistance, potentially leading to interactions shaped by personal preference rather than raw computing power alone. Developers, meanwhile, may need to reassess their strategies, especially if they have relied heavily on a single model: as market share shifts, integrations in apps and services need to be able to shift with it.
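
One way to avoid depending on a single vendor is to code against a small interface and try providers in order. The sketch below is hypothetical: `ChatBackend`, `StubBackend`, and `complete_with_fallback` are illustrative names, and `StubBackend` stands in for a real vendor SDK client, whose actual API it does not reproduce.

```python
from typing import Protocol, Sequence


class ChatBackend(Protocol):
    """Minimal interface the app codes against (hypothetical)."""

    name: str

    def complete(self, prompt: str) -> str: ...


class StubBackend:
    """Placeholder for a real vendor client; echoes the prompt or fails on demand."""

    def __init__(self, name: str, fail: bool = False) -> None:
        self.name = name
        self.fail = fail

    def complete(self, prompt: str) -> str:
        if self.fail:
            raise RuntimeError(f"{self.name} unavailable")
        return f"[{self.name}] {prompt}"


def complete_with_fallback(backends: Sequence[ChatBackend], prompt: str) -> str:
    """Try each backend in order and return the first successful completion."""
    errors = []
    for backend in backends:
        try:
            return backend.complete(prompt)
        except RuntimeError as exc:
            errors.append(f"{backend.name}: {exc}")
    raise RuntimeError("all backends failed: " + "; ".join(errors))


backends = [StubBackend("primary", fail=True), StubBackend("secondary")]
print(complete_with_fallback(backends, "Summarize this article."))
```

Because the app only sees `ChatBackend`, switching the preferred model becomes a matter of reordering the list rather than rewriting the integration.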

In essence, this victory does more than change who sits atop a leaderboard. It nudges everyone, end users and creators alike, to rethink our relationship with artificial intelligence: to embrace change, seek diversity, and foster innovation every step of the way.